Link for the video of Gradient Based Optimization.

Introduction

What is optimization?

Optimization is selecting the best element with some criterion. Optimization problem is maximizing or minimizing functions by choosing inputs.1

Real-life Optimizations Example

- Optimizing the design to maximize the performance.

- Optimizing the recommendation algorithm to show best personalized advertisement.

- Optimizing the factory to spend less time and money.

Mathematical Optimization

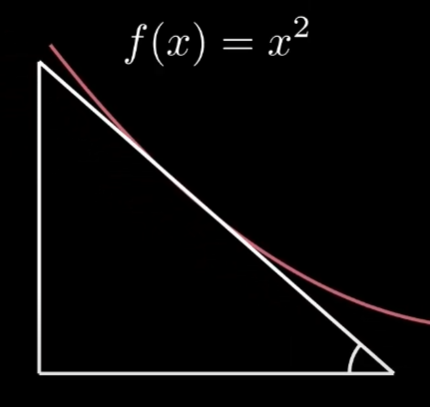

This is just finding that maximizes or minimizes some function . It is easy for some simple functions, i.e. quadratic functions.

To find that minimizes function , we simply differentiate .

is minimized when . However, we want to find parameter values that minimizes the complex function, harder to differentiate symbolically.

Gradient Based Optimization

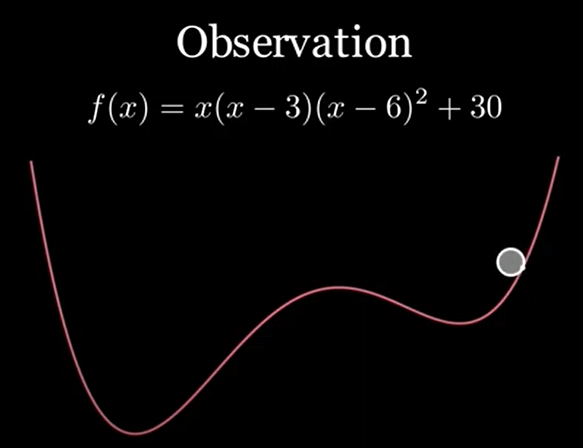

Observations

Assume that global minimum is a only local minimum, and is a value that makes minimum. Then, slope of the function indicates where minimum exists.

- If slope is positive, it means .

- If slope is negative, it means .

Therefore, whatever the value of is, will move value to the direction of . We can do this iteratively, and expect that sequence will be converge to .

Problem: To steep slope

If function is to steep, sequence can diverge. Therefore, we add one hyperparameter, learning rate . Hyperparameter is set before optimization algorithm.2

Problem: Local minimum

We assumed that global minimum is a only local minimum. However, general functions can have local minimum, which is not a global minimum.

Definition

Local minimum is a smallest value in the given range.

With method we build in previous chapter, sequence can easily trapped into the local minimum.

Another Observation

In this example, ball will go to global minimum due to inertia. Therefore, we can apply inertia to our formula.